As of March 24, 2025, Microsoft and NVIDIA have announced several advancements in their collaboration to accelerate AI development and performance. These include the integration of the newest NVIDIA Blackwell platform with Azure AI services infrastructure, the launch of NVIDIA NIM (NVIDIA Inference Microservices) in Azure AI Foundry, the launch of serverless GPUs, and more.

Changes

Integration of NVIDIA Blackwell Platform

The NVIDIA Blackwell Platform is a major advancement in AI and accelerated computing, and features the Blackwell GPU architecture. Compared to previous architectures like Hopper, Blackwell offers up to 2X the attention-layer acceleration and 1.5X more AI compute FLOPS. This makes it ideal for handling trillion-parameter AI models, which are crucial for generative AI, data processing, and engineering simulations.

The newest NVIDIA Blackwell platform is now integrated with Azure AI services infrastructure. This integration leverages NVIDIA’s advanced GPU technology to enhance AI performance and efficiency on Azure.

Launch of NVIDIA NIM Microservices

NVIDIA NIM Microservices help deploy AI applications quickly and reliably across different environments like cloud and data centers. They come with prebuilt AI models and are optimized for high performance and low latency. This makes it easier for companies to use AI without managing complex infrastructure.

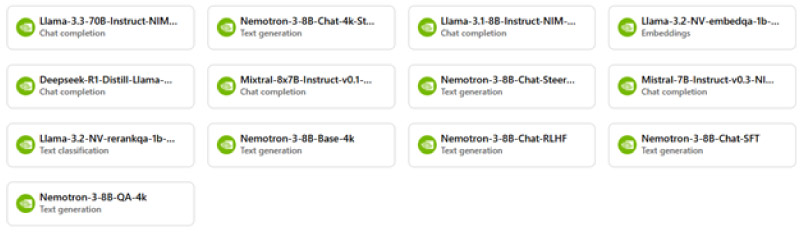

Azure AI Foundry now offers NVIDIA NIM microservices, helping developers quickly bring performance-optimized AI applications to market. The NVIDIA Llama Nemotron Reason open reasoning model will also soon be integrated into Azure AI Foundry.
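To give a rough idea of what calling a deployed NIM microservice can look like, the sketch below builds (but does not send) a chat request. It assumes the deployment exposes an OpenAI-style `/v1/chat/completions` route, as NIM language-model microservices generally do, and that it accepts a bearer token. The endpoint URL, API key, model name, and the helper `build_chat_request` are placeholders for illustration, not values from the announcement.

```python
import json
import urllib.request


def build_chat_request(base_url, api_key, model, prompt, max_tokens=256):
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # Assumption: the deployment authenticates with a bearer token.
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


# Placeholder values; replace them with those of your own deployment.
request = build_chat_request(
    base_url="https://example-nim-endpoint.invalid",
    api_key="YOUR_API_KEY",
    model="example/llama-model",
    prompt="Summarize the benefits of serverless GPUs in one sentence.",
)
print(request.get_method(), request.full_url)
```

Sending the request would be a matter of passing `request` to `urllib.request.urlopen` once real values are in place. The exact route and authentication scheme depend on how the endpoint is deployed.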

Performance Optimization for Meta Llama Models

Meta’s Llama models are a family of large language models (LLMs) designed for various AI applications. Compared to Llama 3, the newer Llama 3.1 offers extended context handling, improved inference speed, and enhanced multimodal support. The Llama family supports multilingual and multimodal use, allowing the models to process both text and visual inputs.

Microsoft and NVIDIA have now optimized the performance of Meta Llama models using TensorRT-LLM, improving throughput and efficiency.

General Availability of Azure ND GB200 V6 VMs

Azure ND GB200 V6 Virtual Machines are designed to provide powerful computing for AI and deep learning tasks.

- High-Performance Computing: These VMs use NVIDIA GB200 Blackwell GPUs, which makes them well suited to complex AI models and large datasets.

- Efficient Networking: They also use NVIDIA Quantum InfiniBand networking, which provides high-throughput, low-latency communication between machines so that data moves quickly between them.

- Scalability: These VMs can connect up to 72 GPUs in a single NVLink domain. NVLink is a high-speed connection that allows GPUs to work together seamlessly, providing even more power and efficiency.

- Improved Performance: Compared to older versions, these VMs offer significant improvements in both speed and efficiency. This ensures that demanding AI workloads run smoothly and quickly.
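The 72-GPU NVLink domain size mentioned above has a simple consequence for planning large jobs, sketched below. The helper names and the contiguous GPU numbering are hypothetical and only serve the illustration. The sketch also assumes that communication between GPUs in different NVLink domains travels over the InfiniBand network rather than NVLink.

```python
import math

GPUS_PER_NVLINK_DOMAIN = 72  # figure stated in the list above


def nvlink_domains_needed(total_gpus):
    """Return how many NVLink domains a job of total_gpus GPUs spans."""
    if total_gpus <= 0:
        raise ValueError("total_gpus must be positive")
    return math.ceil(total_gpus / GPUS_PER_NVLINK_DOMAIN)


def in_same_domain(gpu_a, gpu_b):
    """Check whether two GPUs share an NVLink domain.

    Assumes GPUs are numbered contiguously from 0 and packed
    domain by domain (a hypothetical numbering scheme).
    """
    return gpu_a // GPUS_PER_NVLINK_DOMAIN == gpu_b // GPUS_PER_NVLINK_DOMAIN


for job_size in (8, 72, 73, 288):
    print(job_size, "GPUs ->", nvlink_domains_needed(job_size), "NVLink domain(s)")
```

A job that fits within 72 GPUs can keep all GPU-to-GPU traffic on NVLink, while a larger job spans several domains and relies on the network between them.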

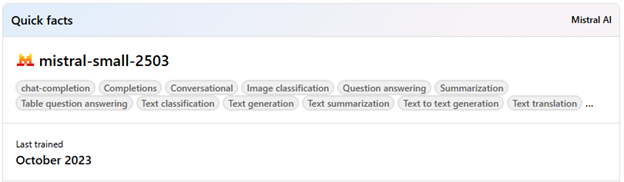

Expansion of Azure AI Foundry Model Catalog: Mistral Small 3.1

The Azure AI Foundry catalog now includes Mistral Small 3.1, featuring multimodal capabilities and extended context length.

This model excels in applications such as programming, reasoning, dialogue, and document analysis. Compared to previous versions, Mistral Small 3.1 offers improved text performance, multimodal understanding, and an expanded context window of up to 128,000 tokens.

Launch of Serverless GPUs

Serverless GPUs provide a scalable and flexible way to run AI workloads on demand without dedicated infrastructure management. These GPUs are available in a serverless environment, allowing automatic scaling, optimized cold start, and per-second billing. Compared to traditional GPU setups, serverless GPUs offer the benefits of GPU acceleration while minimizing operational overhead and costs. This allows organizations to use powerful GPU resources for real-time inferencing, batch processing, and other dynamic AI applications without the complexity and expense of managing physical hardware.

Azure Container Apps now supports serverless GPUs with NVIDIA NIM, which provides a cost-effective solution for running AI workloads on demand.
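The effect of per-second billing on a bursty workload can be illustrated with a little arithmetic. The sketch below compares paying only for active seconds with paying for a GPU that is provisioned around the clock. The hourly rate and the workload figures are made up for illustration and are not Azure prices.

```python
def per_second_cost(active_seconds, rate_per_second):
    """Cost when only the seconds actually used are billed."""
    return active_seconds * rate_per_second


def always_on_cost(hours_provisioned, rate_per_hour):
    """Cost when the GPU is provisioned (and billed) the whole time."""
    return hours_provisioned * rate_per_hour


# Hypothetical figures, not actual Azure pricing.
HOURLY_RATE = 3.60                     # USD per GPU-hour
RATE_PER_SECOND = HOURLY_RATE / 3600   # same rate expressed per second

requests_per_day = 200
seconds_per_request = 45
active_seconds = requests_per_day * seconds_per_request

serverless = per_second_cost(active_seconds, RATE_PER_SECOND)
dedicated = always_on_cost(24, HOURLY_RATE)

print(f"Active GPU time per day: {active_seconds / 3600:.1f} hours")
print(f"Per-second billing:      {serverless:.2f} USD per day")
print(f"Always-on GPU:           {dedicated:.2f} USD per day")
```

The gap narrows as utilization rises, so the comparison is most favorable to serverless GPUs when the workload is idle for much of the day. Real prices, cold-start time, and minimum billing increments would need to be checked against current Azure pricing.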

More Information

Announcement: https://azure.microsoft.com/en-us/blog/microsoft-and-nvidia-accelerate-ai-development-and-performance/

Visit Azure AI Foundry: https://ai.azure.com/

Contact SCHNEIDER IT MANAGEMENT today for consultancy on Azure licensing in order to save costs on Azure and make use of the newest improvements in Azure.